Since writing this a new set of stats has come out (yes, I should have predicted that):

http://www.ons.gov.uk/ons/rel/crime-stats/crime-statistics/focus-on-violent-crime-and-sexual-offences–2012-13/rft-table-2.xls

New technology appears all the time, but it seemed to me that some very serious problems were being under-addressed, such as rape and sexual assault. Technology obviously won’t solve them alone, but I believe it could help to some degree. However, I wanted to understand the magnitude of the problem first, so I sought out the official statistics. I found it an intensely frustrating task that left me angry that government is so bad at collecting proper data. So although I started this as another technology blog, it evolved and I now discuss the statistics too, since poor quality data collection and communication on such an important issue as rape is a huge problem in itself. That isn’t a technology issue, it is one of government competence.

Anyway, the headline stats are that:

1060 rapes of women and 522 rapes of girls under 16 resulted in court convictions. A third as many attempted rapes also resulted in convictions.

14767 reports of rapes or attempted rapes of females (attempts typically make up about 25%) were initially recorded by the police, of which 33% were against girls under 16.

The Crime Survey for England and Wales estimates that 69000 women claim to have been subjected to rape or attempted rape.

I will discuss the stats further after I have considered how technology could help to reduce rape, the original point of the blog.

This is a highly sensitive area, and people get very upset with any discussion of rape because of its huge emotional impact. I don’t want to upset anybody by misplacing blame so let me say very clearly:

Rape and sexual assault are never a victim’s fault. There are no circumstances under which it is acceptable to take part in any sexual act with anyone against their will. If someone does so, it is entirely their fault, not the victim’s. People should not have to protect themselves but should be free to do as they wish without fear of being raped or sexually assaulted. Some people clearly don’t respect that right and rapes and sexual assaults happen. The rest of us want fewer people to be raped or assaulted and want more guilty people to be convicted. Technology can’t stop rape, and I won’t suggest that it can, but if it can help reduce someone’s chances of becoming a victim or help convict a culprit, even in just some cases, that’s progress. I just want to do my bit to help as an engineer. Please don’t just think up reasons why a particular solution is no use in a particular case; think instead how it might help in a few. There are lots of rapes and assaults where nothing I suggest will be of any help at all. Technology can only ever be a small part of our fight against sex crime.

Let’s start with something we could easily do tomorrow, using social networking technology to alert potential victims to some dangers, deter stranger rape or help catch culprits. People encounter strangers all the time – at work, on transport, in clubs, pubs, coffee bars, shops, as well as dark alleys and tow-paths. In many of these places, we expect IT infrastructure, communications, cameras, and people with smartphones.

Social networks often use location and some apps know who some of the people near you are. Shops are starting to use face recognition to identify regular customers and known troublemakers. Videos from building cameras are already often used to try to identify potential suspects or track their movements. Suppose in the not-very-far future, a critical mass of people carried devices that recorded the data of who was near them, throughout the day, and sent it regularly into the cloud. That device could be a special purpose device or it could just be a smartphone with an app on it. Suppose a potential victim in a club has one. They might be able to glance at an app and see a social reputation for many of the people there. They’d see that some are universally considered to be fine upstanding members of the community, even by previous partners, who thought they were nice people, just not right for them. They might see that a few others have had relationships where one or more of their previous partners had left negative feedback, which may or may not be justified. The potential victim might reasonably be more careful with the ones that have dodgy reputations, whether they’re justified or not, and even a little wary of those who don’t carry such a device. Why don’t they carry one? Surely if they were OK, they would? That’s what critical mass does. Above a certain level of adoption, it would rapidly become the norm. Like any sort of reputation, giving someone a false or unjustified rating would carry its own penalty. If you try to get back at an ex by telling lies about them, you’d quickly be identified as a liar by others, or they might sue you for libel. Even at this level, social networking can help alert some people to potential danger some of the time.

Suppose someone ends up being raped. Thanks to the data collected by their device (and those of others) on who was where, when, and with whom, the police would more easily be able to identify some of the people the victim had encountered, and some of them would be able to identify some of the others who didn’t carry such a device. The data would also help eliminate a lot of potential suspects. Unless a rapist had planned in advance to rape, they may even have such a device with them. That might itself be a deterrent from later raping someone they’d met, because they’d know the police would be able to find them more easily. Some clubs and pubs might make it compulsory to carry one, to capitalise on the market from being known as relatively safe hangouts. Other clubs and pubs might be forced to follow suit. We could end up with a society where potential rapists would know that their proximity to their potential victim would be known most of the time. So they might behave.

So even social networking such as we have today or could easily produce tomorrow is capable of acting as a deterrent to some people considering raping a stranger. It increases their chances of being caught, and provides some circumstantial evidence at least of their relevant movements when they are.

Smartphones are very underused as a tool to deter rape. Frequent use of social nets such as uploading photos or adding a diary entry into Facebook helps to build a picture of events leading up to a crime that may later help in inquiries. Again, that automatically creates a small deterrence by increasing the chances of being investigated. It could go a lot further though. Life-logging may use a microphone that records continuous audio all day and a camera that records pictures when the scene changes. This already exists but is not in common use yet – frequent Facebook updates are as far as most people currently get to life-logging. Almost any phone is capable of recording audio, and can easily do so from a pocket or bag, but if a camera is to record frequent images, it really needs to be worn. That may be OK in several years if we’re all wearing video visors with built-in cameras, but in practice and for the short-term, we’re realistically stuck with just the audio.

So life-logging technology could record a lot of the events, audio and pictures leading up to an offence, and any smartphone could do at least some of this. A rapist might forcefully search for and remove such devices from a victim or their bag, but by then they might already have transmitted a lot of data into the cloud, possibly even evidence of a struggle that may be used later to help convict. If not removed, it could even record audio throughout the offence, providing a good source of evidence. Smartphones also have accelerometers in them, so they could even act as a sort of black box, showing when a victim was still, walking, running, or struggling. Further, phones often have tracking apps on them, so if a rapist did steal a phone, it may show their later movements up to the point where they dumped it. Phones can also be used to issue distress calls. An emergency distress button would be easy to implement, and could transmit exact location and stream audio to the emergency services. An app could also be set up to issue a distress call automatically under specific circumstances, such as detecting a struggle, a scream or a call for help. Finally, a lot of phones are equipped for ID purposes, and that will generally increase the proportion of people in a building whose identity is known. Someone who habitually uses their phone for such purposes could be asked to justify disabling ID or tracking services when later interviewed in connection with an offence. All of these developments will make it just a little bit harder to escape justice and that knowledge would act as a deterrent.

Overall, a smartphone’s accelerometer, positioning, audio, image and video recording, and its ability to transmit any such data on to cloud storage, make it a potentially very useful black box, and that surely must be a significant deterrent. From the point of view of someone falsely accused, it also could act as a valuable proof of innocence if they can show that the whole time they were together was amicable, or if indeed they were somewhere else altogether at the time. So actually, both sides of a date have an interest in using such black box smartphone technology, and on a date with someone new, enabling continuous black box logging throughout could be encouraged as a sensible precautionary habit. People might reasonably object to having a continuous recording happening during a legitimate date if they thought there was a danger it could be used by the other person to entertain their friends or uploaded on to the web later, but it could easily be implemented to protect privacy and avoid the risk of misuse. That could be achieved by using an app that keeps the record on a database but gives nobody access to it without a court order. It would be hard to find a good reason to object to the other person protecting themselves by using such an app. With such privacy safeguards and the extra protection on offer, perhaps it could become as much part of safe sex as using a condom. Imagine if women’s groups were to encourage a trend to make this sort of recording on dates the norm – no app, no fun!

These technologies would be useful primarily in deterring stranger rape or date rape. I doubt if they would help as much with rapes that are by someone the victim knows. There are a number of reasons. It’s reasonable to assume that when the victim knows the rapist, and especially if they are partners and have regular sex, it is far less likely that either would have a recording going. For example, a woman may change her mind during sex that started off consensually. If the man forces her to continue, it is very unlikely that there would be anything recorded to prove rape occurred. In an abusive or violent relationship, an abused partner might use an audio recording via a hidden device when they are concerned – an app could initiate a recording on detection of a secret keyword, or when voices are raised, even when the phone is put in a particular location or orientation. So it might be easy to hide the fact that a recording is going and it could be useful in some cases. However, the fear of being caught doing so by a violent partner might be a strong deterrent, and an abuser may well have full access to or even control of their partner’s phone, and most of all, a victim generally doesn’t know they are going to be raped. So the phone probably isn’t a very useful factor when the victim and rapist are partners or are often together in that kind of situation. However, when it is two colleagues or friends in a new kind of situation, which also accounts for a significant proportion of rapes, perhaps it is more appropriate and normal dating protocols for black box app use may more often apply. Companies could help protect employees by insisting that such a black box recording is in force when any employees are together, in or out of office hours. They could even automate it by detecting proximity of their employees’ phones.

The smartphone is already ubiquitous and everyone is familiar with installing and using apps, so any of this could be done right away. A good campaign supported by the right groups could ensure good uptake of such apps very quickly. And it needn’t be all phone-centric. A new class of device would be useful for those who feel threatened in abusive relationships. Thanks to miniaturisation, recording and transmission devices can easily be concealed in just about any everyday object, many that would be common in a handbag or bedroom drawer or on a bedside table. If abuse isn’t just a one-off event, they may offer a valuable means of providing evidence to deal with an abusive partner.

Obviously, black boxes or audio recording can’t stop someone from using force or threats, but they can provide good quality evidence, and the deterrent effect of likely being caught is a strong defence against any kind of crime. I think that is probably as far as technology can go. Self-defence weapons such as pepper sprays and rape alarms already exist, but we don’t allow use of tasers or knives or guns and similar restrictions would apply to future defence technologies. Automatically raising an alarm and getting help to the scene quickly is the only way we can reasonably expect technology to help deal with a rape that is occurring, but that makes the use of deterrence via probable detection all the more valuable. Since the technologies also help protect the innocent against false accusations, that would help in getting their social adoption.

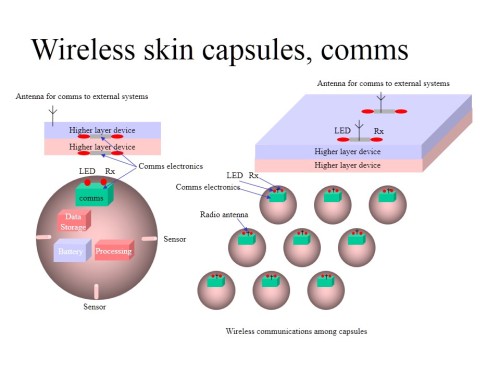

So much for what we could do with existing technology. In a few years, we will become accustomed to having patches of electronics stuck on our skin. Active skin and even active makeup will have a lot of medical functions, but it could also include accelerometers, recording devices, pressure sensors and just about anything that uses electronics. Any part of the body can be printed with active skin or active makeup, which is then potentially part of this black box system. Invisibly small sensors in makeup, on thin membranes or even embedded among skin cells could notionally detect, measure and record any kiss, caress, squeeze or impact, even record the physical sensations experienced by recording the nerve signals. It could record pain or discomfort, along with precise timing and location, and measure many properties of the skin touching or kissing it too. It might be possible for a victim to prove exactly when a rape happened, exactly what it involved, and who was responsible. Such technology is already being researched around the world. It will take a while to develop and become widespread, but it will come.

I don’t want this to sound frivolous, but I suggested many years ago that when women get breast implants, they really ought to have at least some of the space used for useful electronics, and electronics can actually be made using silicone. A potential rapist can’t steal or deactivate a smart breast implant as easily as a phone. If a woman is going to get implants anyway, why not get ones that increase her safety by having some sort of built-in black box? We don’t have to wait a decade for the technology to do that.

The statistics show that many rapes and sexual assaults that are reported don’t result in a conviction. Some accusations may be false, and I couldn’t find any figures for that number, but lack of good evidence is one of the biggest reasons why many genuine rapes don’t result in conviction. Technology can’t stop rapes, but it can certainly help a lot to provide good quality evidence to make convictions more likely when rapes and assaults do occur.

By making people more aware of potentially risky dates, and by gathering continuous data streams when they are with someone, technology can provide an extra level of safety and a good deterrent against rape and sexual assault. That in no way implies that rape is anyone’s fault except the rapist’s, but with high social support, it could help make a significant drop in rape incidence and a large rise in conviction rates. I am aware that in the biggest category, the technology I suggest has the smallest benefit to offer, so we will still need to tackle rape by other means. It is only a start, but better some reduction than none.

The rest of this blog is about rape statistics, not about technology or the future. It may be of interest to some readers. Its overwhelming conclusion is that official stats are a mess and nobody has a clue how many rapes actually take place.

Summary Statistics

We hear politicians and special interest groups citing and sometimes misrepresenting wildly varying statistics all the time, and now I know why. It’s hard to know the true scale of the problem, and very easy indeed to be confused by poor presentation of poor quality government statistics in the sexual offenses category. That is a huge issue and source of problems in itself. Although it is very much on the furthest edge of my normal brief, I spent three days trawling through the whole sexual offenses field, looking at the crime survey questionnaires, the gaping holes and inconsistencies in collected data, and the evolution of offense categories over the last decade. It is no wonder government policies and public debate are so confused when the data available is so poor. It very badly needs fixing.

There are several stages at which some data is available outside and within the justice system. The level of credibility of a claim obviously varies at each stage as the level of evidence increases.

Outside of the justice system, someone may claim to have been raped in a self-completion module of the Crime Survey for England and Wales (CSEW), knowing that it is anonymous, nobody will query their response, no further verification will be required and there will be no consequences for anyone. There are strong personal and political reasons why people may be motivated to give false information in a survey designed to measure crime levels (in either direction), especially in those sections not done by face to face interview. These reasons are magnified when people filling it in know that their answers will be scaled up to represent the whole population, so that already introduces a large motivational error source. However, even for a person fully intending to tell the truth in the survey, some questions are ambiguous or biased, and some are highly specific while others leave far too much scope for interpretation, leaving gaps in some areas while obsessing with others. In my view, the CSEW is badly conceived and badly implemented. In spite of unfounded government and police assurances that it gives a more accurate picture of crime than other sources, having read it, I have little more confidence in the CSEW as an indicator of actual crime levels than in a casual conversation in a pub. We can be sure that some people don’t report some rapes for a variety of reasons and that in itself is a cause for concern. We don’t know how many go unreported, and the CSEW is not a reasonable indicator. We need a more reliable source.

The next stage for potential stats is that anyone may report any rape to the police, whether of themselves, a friend or colleague, witnessing a rape of a stranger, or even something they heard. The police will only record some of these initial reports as crimes, using a fairly common-sense approach. According to the report, ‘the police record a crime if, on the balance of probability, the circumstances as reported amount to a crime defined by law and if there is no credible evidence to the contrary’. 7% of these are later dropped for reasons such as errors in initial recording or retraction. However, it has recently been revealed that some forces record every crime reported whereas others record it only after it has passed the assessment above, damaging the quality of the data by mixing two different types of data together. In such an important area of crime, it is most unsatisfactory that proper statistics are not gathered in a consistent way for each stage of the criminal justice process, using the same criteria in every force.

Having recorded crimes, the police will take some of them forward through the criminal justice system.

Finally, the courts will find proven guilt in some of those cases.

I looked for the data for each of these stages, expecting to find vast numbers of tables detailing everything. Perhaps they exist, and I certainly followed a number of promising routes, but most of the roads I followed ended up leading back to the CSEW and the same overview report. This joint overview report for England and Wales was produced by the Ministry of Justice, Home Office and the Office for National Statistics in 2013, and it includes a range of tables with selected data from actual convictions through to results of the Crime Survey for England and Wales. While useful, it omits a lot of essential data that I couldn’t find anywhere else either.

The report and its tables can be accessed from:

http://www.ons.gov.uk/ons/rel/crime-stats/an-overview-of-sexual-offending-in-england—wales/december-2012/index.html

Another site gives a nice infographic on police recording, although for a different period. It is worth looking at if only to see the wonderful caveat: ‘the police figures exclude those offences which have not been reported to them’. Here it is:

http://www.ons.gov.uk/ons/rel/crime-stats/crime-statistics/period-ending-june-2013/info-sexual-offenses.html

In my view the ‘overview of sexual offending’ report mixes different qualities of data for different crimes and different victim groups in such a way as to invite confusion, distortion and misrepresentation. I’d encourage you to read it yourself if only to convince you of the need to pressure government to do it properly. Be warned, a great deal of care is required to work out exactly which crime and which victim group each figure refers to. Some figures include all people, some only females, some only women 16-59 years old. Some refer to different crime groups with similar sounding names such as sexual assault and sexual offence, and some include attempts whereas others don’t. Worst of all, some very important statistics are missing, and it’s easy to assume another one refers to what you are looking for when on closer inspection, it doesn’t. However, there doesn’t appear to be a better official report available, so I had to use it. I’ve done my best to extract and qualify the headline statistics.

Taking rapes against both males and females, in 2011, 1153 people were convicted of carrying out 2294 rapes or attempted rapes, an average of 2 each. The conviction rate was 34.6% of 6630 proceeded against, from 16041 rapes or attempted rapes recorded by the police. Inexplicably, conviction figures are not broken down by victim gender, nor by rape or attempted rape.
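For anyone who wants to check the arithmetic, here is a minimal sketch that reproduces the two derived figures in the paragraph above from the quoted counts. The variable names are my own, and I am assuming the 34.6% rate is the 2294 convicted offences divided by the 6630 proceeded against, since that is what reproduces it.

```python
# Counts quoted from the overview report (2011 figures, males and females).
people_convicted = 1153
offences_convicted = 2294   # rapes or attempted rapes resulting in conviction
proceeded_against = 6630

# Average number of convicted offences per convicted person.
avg_per_person = offences_convicted / people_convicted

# Assumption: the quoted conviction rate is convictions / proceeded against.
conviction_rate = offences_convicted / proceeded_against

print(f"{avg_per_person:.2f} offences per convicted person")   # 1.99
print(f"{conviction_rate:.1%} of those proceeded against")     # 34.6%
```

The 1.99 is what gets rounded to "an average of 2 each".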

Police recording stats are broken down well. Of the 16041 rapes and attempted rapes recorded by the police, 1274 (8%) were against males, while 14767 (92%) were against females. 33% of the female rapes recorded and 70% of male rapes recorded were against children (though far more girls were raped than boys). Figures are also broken down well against ethnicity and age, for offender and victim. Figures elsewhere suggested that 25% of the combined total are attempts that were unsuccessful. Applying the 92% female proportion to the 2294 convictions gives about 2110 for rape or attempted rape of a female, and taking 75% of that gives about 1582 convictions for actual rape of a female, approximately 1060 women and 522 girls, but those figures only hold true if the proportions are similar through to conviction.
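Since the report leaves this estimate to the reader, here is a rough sketch of how the 1582, 1060 and 522 figures can be reproduced. It is only my own back-of-envelope calculation, not anything official: it assumes the police-recording proportions (92% female, 25% attempts, 33% girls under 16) carry through unchanged to conviction, and it rounds down at each step. The variable names are made up for illustration.

```python
# Quoted counts: convictions cover both sexes, rape and attempted rape together.
convicted_offences = 2294
recorded_total = 16041
recorded_female = 14767

# Proportions as whole percentages, taken from police recording.
female_pct = round(100 * recorded_female / recorded_total)  # 92
attempt_pct = 25   # assumed share that are unsuccessful attempts
girls_pct = 33     # share of female victims who are under 16

# Integer arithmetic, rounding down at each step.
female_convictions = convicted_offences * female_pct // 100        # 2110
actual_rape_female = female_convictions * (100 - attempt_pct) // 100  # 1582
attempted_rape_female = female_convictions - actual_rape_female    # 528
girls = actual_rape_female * girls_pct // 100                      # 522
women = actual_rape_female - girls                                 # 1060

print(female_convictions, actual_rape_female, attempted_rape_female, women, girls)
```

The 528 attempted rapes is where "a third as many attempted rapes" in the headline stats comes from. If the real proportions at conviction differ from those at recording, all of these numbers shift accordingly.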

Surely such a report should clearly state such an important figure as the number of rapes of a female that led to a conviction, and not leave it to readers to calculate their own estimate from pieces of data spread throughout the report. Government needs to do a lot better at gathering, categorising, analysing and reporting clear and accurate data.

That 1582 figure for convictions is important, but it represents only the figure for rapes proven beyond reasonable doubt. Some females were raped and the culprit went unpunished. There has been a lot of recent effort to try to get a better conviction rate for rapes. Getting better evidence more frequently would certainly help get more convictions. A common perception is that many or even most rapes are unreported so the focus is often on trying to get more women to report it when they are raped. If someone knows they have good evidence, they are more likely to report a rape or assault, since one of the main reasons they don’t report it is lack of confidence that the police can do anything.

Although I don’t have much confidence in the figures from the CSEW, I’ll list them anyway. Perhaps you have greater confidence in them. The CSEW uses a sample of people, and then results are scaled up to represent the whole population. The CSEW estimates that 52000 (95% confidence interval of 39000 to 66000) women between 16 and 59 years old claim to have been a victim of actual rape in the last 12 months, based on anonymous self-completion questionnaires, with 69000 (95% confidence interval of 54000 to 85000) women claiming to have been a victim of attempted or actual rape in the last 12 months.

In the same period, 22053 sexual assaults were recorded by the police. I couldn’t find any figures for convictions for sexual assaults, only for sexual offenses, which is a different, far larger category that includes indecent exposure and voyeurism. It isn’t clear why the report doesn’t include the figures for sexual assault convictions. Again, government should do better in their collection and presentation of important statistics.

The overview report also gives the stats for the number of women who said they reported a rape or attempted rape. 15% of women said they told the police, 57% said they told someone else but not the police, and 28% said they told nobody. The report does give the reasons commonly cited for not telling the police: “Based on the responses of female victims in the 2011/12 survey, the most frequently cited were that it would be ‘embarrassing’, they ‘didn’t think the police could do much to help’, that the incident was ‘too trivial/not worth reporting’, or that they saw it as a ‘private/family matter and not police business’.”

Whether you pick the 2110 convictions for rape or attempted rape against a female or the 69000 claimed in anonymous questionnaires, or anywhere in between, a lot of females are being subjected to actual and attempted rapes, and a lot are victims of sexual assault. The high proportion of victims that are young children is especially alarming. Male rape is a big problem too, but the figures are a lot lower than for female rape.