I’ve returned to this topic frequently over the last 20 years. It usually features in my books at some point too, but it’s always good to look afresh at anything. Sometimes you see something you didn’t see last time.

Some of the potential future is pretty obvious. I use the word potential, because there are usually choices to be made, regulations that may or may not get in the way, or many other reasons we could divert from the main road or even get blocked completely.

We’ve been learning about genetics for a long time now, with a few key breakthroughs. It is certain that our understanding will increase, less certain how far people will be permitted to exploit the potential here in any given time frame. But let’s take a good example to learn a key message first. In IVF, we can filter out embryos that have the ‘wrong’ genes, and use their sibling embryos instead. Few people have a problem with that. At the same time, a pregnant woman might choose an abortion on discovering that the child is the ‘wrong’ gender, but in the UK at least, that is illegal. The moral and ethical values of our society are on a random walk though, changing direction frequently. The social assignment of right and wrong can reverse completely in just 30 years. In this example, attitudes to abortion itself reversed completely within 30 years, so who is to say attitudes to abortion on the grounds of gender won’t reverse too? It is unwise to expect that future generations will have the same value sets. In fact, it is highly unlikely that they will.

That lesson likely applies to many technology developments and quite a lot of social ones – such as euthanasia and assisted suicide, both already well into their attitude reversal. At some point, even if something is distasteful to current attitudes, it is pretty likely to be legalized eventually, and hard to ban once the door is opened. There will always be another special case that opens the door a little further. So we should assume that we may eventually use genetics to its full capability, even if it is temporarily blocked for a few decades along the way. The same goes for other biotech, nanotech, IT, AI and any other transhuman enhancements that might come down the road.

So, where can we go in the future? What sorts of splits can we expect in the future human evolution path? It certainly won’t remain as just plain old homo sapiens.

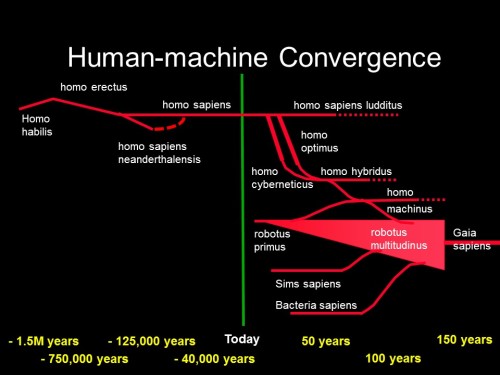

I drew this evolution path a long time ago, in the mid-1990s:

It was clear even then that we could connect external IT to the nervous system, eventually the brain, and this would lead to IT-enhanced senses, memory, processing, higher intelligence, hence homo cyberneticus. (No point in having had to suffer Latin at school if you aren’t allowed to get your own back on it later.) Meanwhile, genetic enhancement and optimization of selected features would lead to homo optimus. Converging these two – why should you have to choose, why not have a perfect body and an enhanced mind? – you get homo hybridus. Meanwhile, in the robots and AI world, machine intelligence is increasing and we eventually get the first self-aware AI/robot (it makes little sense to separate the two since networked AI can easily be connected to a machine such as a robot), and this has its own evolution path towards a rich diversity of different kinds of AI and robots, robotus multitudinus. Since both the AI world and the human world could be connected to the same network, it is then easy to see how they could converge, to give homo machinus. This future transhuman would have any of the abilities of humans and machines at its disposal, and eventually the ability to network minds into a shared consciousness. A lot of ordinary, conventional humans would remain even with safe upgrades available; I called them homo sapiens ludditus. As they watch their neighbors getting all the best jobs, winning at all the sports, buying everything, and getting the hottest dates too, many would be tempted to accept the upgrades, and homo sapiens might gradually fizzle out.

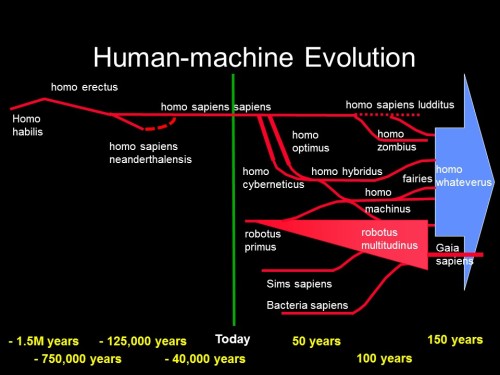

My future evolution timeline stayed like that for several years. Then in the early 2000s I updated it to include later ideas:

I realized that we could still add AI into computer games long after it becomes comparable with human intelligence, so games like EA’s The Sims might evolve to host entire civilizations within a computer game, each member aware of their existence, each running just as real a life as you and I. It is perhaps unlikely that we would allow children any time soon to control fully sentient people within a computer game, acting as some sort of god to them, but who knows? Future people may argue that they’re not really real people, so it’s OK. Anyway, you could employ them in the game to do real knowledge work and make money, like slaves. But since you’re nice, you might run an incentive program that lets them buy their freedom if they do well, letting them migrate into an android. They could even carry on living in their Sims home and still wander round in our world too.

Emigration from computer games into our world could be high, but the reverse is also possible. If the mind is connected well enough, and enhanced so far by external IT that almost all of it runs on the IT instead of in the brain, then when your body dies, your mind would carry on living. It could live in any world, real or fantasy, or move freely between them. (As I explained in my last blog, it would also be able to travel in time, subject to certain very expensive infrastructural requirements.) As well as migrants coming via the electronic immortality route, it is likely that some people who are unhappy in the real world might prefer to end it all and migrate their minds into a virtual world where they might be happy. I can imagine that would be a popular alternative to suicide. If they feel better later, they could even come back, using an android. So we’d have an interesting future with lots of variants of people, AI and computer game and fantasy characters migrating among various real and imaginary worlds.

But it doesn’t stop there. Meanwhile, back in the biotech labs, progress is continuing to harness bacteria to make components of electronic circuits (after which the bacteria are dissolved to leave the electronics). Bacteria can also have genes added to emit light or electrical signals. They could later be enhanced so that as well as being able to fabricate electronic components, they could power them too. We might add various other features too, but eventually, we’re likely to end up with bacteria that contain electronics and can connect to other bacteria nearby that contain other electronics to make sophisticated circuits. We could obviously harness self-assembly and self-organisation, which are also progressing nicely. The result is that we will get smart bacteria, collectively making sophisticated, intelligent, conscious entities of a wide variety, with lots of sensory capability distributed over a wide range. Bacteria Sapiens.

I often talk about smart yogurt using such an approach as a key future computing solution. If it were to stay in a yogurt pot, it would be easy to control. But it won’t. A collective bacterial intelligence such as this could gain a global presence, and could exist on land, at sea and in the air, maybe even in space. Building in lots of different biological properties could enable colonization of every niche. In fact, the first few generations of bacteria sapiens might be smart enough to design their own offspring. They could probably buy or gain access to equipment to fabricate them and release them to multiply. It might be impossible for humans to stop this once it gets to a certain point. Accidents happen, as do rogue regimes, terrorism and general mad-scientist type mischief.

And meanwhile, we’ll also be modifying nature. We’ll be genetically enhancing a wide range of organisms, bringing some back from extinction, creating new ones, adding new features, in some cases even changing some of the basic mechanisms by which nature works. We might even create new kinds of DNA or develop substitutes with enhanced capability. We may change nature’s evolution hugely. With a mix of old and new and modified, nature evolves nicely into Gaia Sapiens.

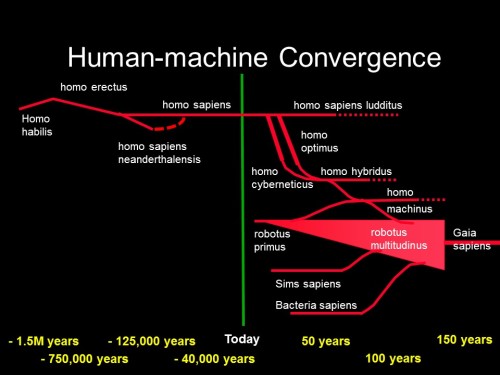

We’re not finished with the evolution chart though. Here is the next one:

Just one thing is added. Homo zombius. I realized eventually that the sci-fi ideas of zombies being created by viruses could be entirely feasible. A few viruses, bacteria and other parasites can affect the brains of the victims and change their behaviour to harness them for their own life cycle.

See http://io9.com/12-real-parasites-that-control-the-lives-of-their-hosts-461313366 for fun.

Bacteria sapiens could be highly versatile. It could make virus variants if need be. It could evolve itself to be able to live in our bodies, maybe penetrate our brains. Bacteria sapiens could make tiny components that connect to brain cells and intercept signals within our brains, or put signals back in. It could read our thoughts, and then control them. It could essentially convert people into remote-controlled robots, or zombies as we usually call them. It could even control muscles directly, up to a point, so even if the zombie is decapitated, it could carry on for a short while. I used that as part of my storyline in Space Anchor. If future humans have widespread availability of cordless electricity, as they might, then it is far-fetched but possible that headless zombies could wander around for ages, using the bacterial sensors to navigate. Homo zombius would be mankind enslaved by bacteria. Hopefully just a few people, but it could be everyone if we lose the battle. Think how difficult a war against bacteria would be, especially if they can penetrate anyone’s brain and intercept thoughts. The Terminator films look a lot less scary when you compare the Terminator with the real potential of smart yogurt.

Bacteria sapiens might also need to be consulted when humans plan any transhuman upgrades. If they don’t consent, we might not be able to do other transhuman stuff. Transhumans might only be possible if transbacteria allow it.

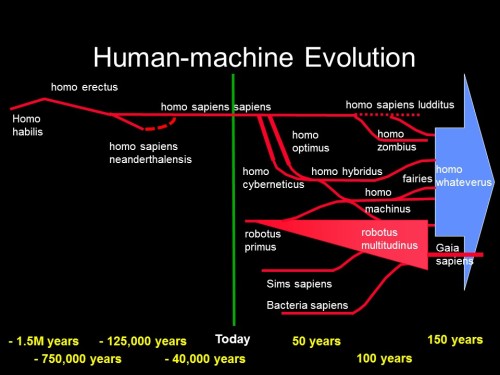

Not done yet. I wrote a couple of weeks ago about fairies. I suggested fairies are entirely feasible future variants that would be ideally suited to space travel.

Fairies will dominate space travel

They’d also have lots of environmental advantages, as well as most other things from the transhuman library. I think they’re inevitable, so we should add fairies to the future timeline. We need a revised timeline, and they certainly deserve their own branch. But I haven’t drawn it yet, hence this blog as an excuse. Before I do and finish this, what else needs to go on it?

Well, time travel in cyberspace is feasible and attractive beyond 2075. It isn’t proper real-world time travel, which physics doesn’t permit, but it could feel just like it to those involved, and it could go further than you might think. It will certainly have some effects in the real world, because some of the active members of society beyond 2075 might be involved in it. It certainly changes the future evolution timeline if people can essentially migrate from one era to another. (There are some very strong caveats here that I tried to explain in my last blog, so please don’t misquote me as a nutter. I haven’t forgotten basic physics and logic; I’m just suggesting a feasible implementation of cyberspace that would allow time travel within it. It is really a cyberspace bubble that intersects with the real world at the real time front, so it doesn’t cause any physics problems, but at that intersection its users can interact fully with the real world, so their cultural experiences of time travel are significant to others outside it.)

What else? OK, well there is a very significant community (many millions of people) that engages in all sorts of fantasy in shared online worlds, chat rooms and other forums. Fairies, elves, assorted spirits, assorted gods, dwarves, vampires, werewolves, assorted furry animals, assorted aliens, dolls, living statues, mannequins, remote-controlled people, assorted inanimate but living objects, plants and of course assorted robot/android variants are just some of those that already exist in principle. I’m sure I’ve forgotten some, and anyway, many more are invented every year, so an exhaustive list would quickly go out of date. In most cases, many people already role-play these with a great deal of conviction and imagination, not just in standalone games, but in communities, with rich cultures, back-stories and storylines. So we know there is strong demand, and we’re only waiting for their implementation once technology catches up, which it certainly will.

Biotech can do a lot, and nanotech and IT can add greatly to that. If you can design any kind of body with almost any kind of properties and constraints and abilities, and add any kind of IT and sensing and networking and sharing and external links for control and access and duplication, we will have an extremely rich diversity of future forms with an infinite variety of subcultures, cross-fertilization, migration and transformation. In fact, I can’t add just a few branches to my timeline. I need millions. So instead I will just lump all these extras into a huge collected category that allows almost anything, called Homo Whateverus.

So, here is the future of human (and associates) evolution for the next 150 years. A few possible cross-links are omitted for clarity.

I won’t be around to watch it all happen. But a lot of you will.