Some things are getting better, some worse. 2050 will be neither dystopian nor utopian: a balance of good and bad not unlike today, but with different goods and bads, and slightly better overall. More detail? Okay. For most of my followers this will mostly collate things you already know, but there's no harm in a refresher: Futures 101.

Health

We will have cost-effective and widespread cures or control for most cancers, heart disease, diabetes, dementia and most other killers. Quality-of-life diseases such as arthritis will also be controllable or curable. People will live longer and remain healthier for longer, with an accelerated decline at the end.

On the bad side, new diseases will exist, including mutated antibiotic-resistant versions of existing ones. There will still be occasional natural flu mutations and other viruses, and still others arising from contact between people and other animals, spreading more easily because of increased population, urbanization and better mobility. Some previously rare diseases will become big problems for the same reasons. Urbanization will be a challenge.

However, diagnostics will be faster and better, we will no longer be so reliant on antibiotics to fight back, and sterilisation techniques for hospitals will be much improved. So even with greater challenges, we will cope fine most of the time, with only occasional epidemic headlines.

A darker side is the increasing prospect for bio-terrorism, with man-made viruses deliberately designed to be highly lethal, very contagious and to withstand most conventional defenses, optimized for maximum and rapid spread by harnessing mobility and urbanization. With pretty good control or defense against most natural threats, this may well be the biggest cause of mass deaths in 2050. Bio-warfare is far less likely.

Utilizing other techs, these bio-terrorist viruses could be deployed by swarms of tiny drones that would be hard to spot until too late, and of course such swarms could also deliver chemical weapons such as nerve gas. Another tech-based health threat is nanotechnology devices designed to invade the body, damage or destroy systems or even control the brain. It is easy to detect and shoot down macro-scale deployment weapons such as missiles or large drones, but far harder to defend against tiny devices such as midge-sized drones or nanotech devices.

The overall conclusion on health is that people will mostly experience much improved lives with good health, long life and a rapid end. A relatively few (but very conspicuous) people will fall victim to terrorist attacks, made far more feasible and effective by changing technology and demographics.

Loneliness

An often-overlooked benefit of increasing longevity is the extended multi-generational family. It will be commonplace to have great grandparents and great-great grandparents. With improved health until near their end, these older people will be seen more as welcome and less as a burden. This advantage will be partly offset by increasing global mobility, so families are more likely to be geographically dispersed.

Not everyone will have close family to enjoy and to support them. Loneliness is increasing even as we lead busier, fuller lives. Social inclusion depends on a number of factors, and at least some of those will improve. Consider public transport that requires an elderly person to walk 15 minutes to a bus stop, wait ages in the rain and wind, and then ride a bus on which they are very likely to catch a disease from another passenger. It is really not fit for purpose. Such primitive and unsuitable systems will be replaced in the next decades by far more socially inclusive self-driving cars. Fleets of these will replace buses and taxis. They will pick people up from their homes, take them all the way to where they need to go, then take them home when needed. As well as being very low cost and very environmentally friendly, they will have almost zero accident rates and provide fast journey times thanks to very low congestion. Best of all, they will make social inclusion easier for everyone by removing the barriers of difficult, slow, expensive and tedious journeys. It will be far easier for a lonely person to get out and enjoy cultural activity with other people.

More intuitive social networking, coupled with augmented and virtual reality environments in which to socialize, will also make contact easier even without going anywhere. AI will be better at finding suitable companions and lovers for those who need assistance.

Even so, some people will not benefit and will remain lonely due to other factors such as poor mental health, lack of social skills, or geographic isolation. They still do not need to be alone. 2050 will also feature large numbers of robots and AIs, and although these might not be quite as valuable to some as human contact, they will be a pretty good substitute. Although many will be functional, cheap and simply fit for purpose, those designed for companionship or home support will very probably look and behave human. They will have good intellectual and emotional skills and will be able to act as a very smart executive assistant, domestic servant, personal doctor and nurse, even a sex partner if needed.

It would be too optimistic to say we will eradicate loneliness by 2050, but we can certainly make a big dent in it.

Poverty

Technological progress will greatly increase the size of the global economy. Even with the odd recession, our children will be far richer than our parents. It is reasonable to expect the total economy to be well over twice today's size by 2050. Average growth of about 2.5% a year, compounded over the 34 years from 2016, gives roughly 2.3 times, and I think 2.5% is a reasonable estimate given that technology benefits are accelerating rather than slowing, even in spite of the recent recession.
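As a rough check on the compounding, assuming 2016 (the year mentioned later in this post) as the base year and a constant annual growth rate $g$, with $Y$ standing for the size of the global economy:

$$
\frac{Y_{2050}}{Y_{2016}} = (1+g)^{34}, \qquad (1.025)^{34} \approx 2.32, \qquad g_{\times 2.5} = 2.5^{1/34} - 1 \approx 0.027
$$

So 2.5% a year gives a little over 2.3 times today's economy; a factor of 2.5 by 2050 would need growth averaging about 2.7% a year. Small differences in the growth rate compound into large differences over three decades.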

As long as we define the poverty line as a percentage of average income, poverty is guaranteed to remain even if everyone lives like royalty. If average income were a million dollars per year, 60% of that would make you rich by any sensible definition, but would still qualify as poverty under the ludicrous relative-income definition used in the UK and some other countries. At some point we need to stop calling people poor if they can afford healthy food, pay everyday bills, buy decent clothes, have a decent roof over their heads and take an occasional holiday. With the global economy improving so much and so fast, and with people having far better access to markets via networks, it will be far easier for people everywhere to earn enough to live comfortably.
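A small sketch makes the point about relative measures concrete. It assumes, as the UK's official relative measure does, a poverty line at 60% of median income; the function name and the list of incomes are hypothetical, chosen purely for illustration. Multiplying everyone's income by the same factor leaves the share below the line unchanged:

```python
from statistics import median


def relative_poverty_rate(incomes, threshold=0.6):
    """Fraction of people whose income is below `threshold` times the median.

    Hypothetical helper for illustration only.
    """
    line = threshold * median(incomes)
    return sum(1 for x in incomes if x < line) / len(incomes)


# Hypothetical annual incomes, purely illustrative.
incomes = [8_000, 12_000, 15_000, 22_000, 30_000,
           35_000, 48_000, 60_000, 90_000, 150_000]

# Everyone becomes fifty times richer.
richer = [x * 50 for x in incomes]

print(relative_poverty_rate(incomes))
print(relative_poverty_rate(richer))
```

Both calls print 0.3: making everyone fifty times richer still leaves the same 30% classed as poor, because the line rises in step with incomes.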

In most countries, welfare will be able to provide a decent level of support for those who can't easily look after themselves. The ongoing globalization of compassion that we see today will likely produce a global welfare net by 2050. Not everyone will be rich, and some won't even be very comfortable, but I believe absolute poverty will be eliminated in most countries, and we can ensure that most people are able to live in dignity. I think the means, motive and opportunity will make that happen, but it won't reach everyone. Some people will live under dysfunctional governments that prevent them from accessing support that would otherwise be available to them. Hopefully not many. Absolute poverty by 2050 won't be history, but it will be rare.

In most developed countries, the more generous welfare net might extend to providing a 'citizen wage' for everyone, set at roughly the level of today's average wage. No-one need be poor in 2050.

Environment

The environment will be in good shape in 2050. I have no sympathy with doom mongers who predict otherwise. As our wealth increases, we tend to look after the environment better. As technology improves, we will achieve far higher standards of living while looking after the environment. Better mining techniques will allow more reserves to become economic, we will need fewer resources to do the same job better, and reuse and recycling will make more use of the same material.

Short-term nightmares such as China's urban pollution levels will be history by 2050. Energy supply is one of the big contributors to pollution today, but by 2050, combinations of shale gas, nuclear energy (uranium and thorium), fusion and solar energy will make up the vast bulk of energy supply. Oil and unprocessed coal will mostly be left in the ground, though bacterial conversion of coal into gas may well be used. Oil that isn't extracted by 2030 will be left there, too expensive compared to making the equivalent energy by other means. Conventional nuclear energy will also be on its way to being phased out due to cost. Energy from fusion will only be starting to come on stream, but solar energy will be cheap to harvest and high-tech cabling will make it easier to distribute from sunny areas to where it is needed.

It isn't too much to expect future governments to negotiate an arrangement whereby energy is harvested in deserts and food crops are grown on fertile land. We should not place solar panels on fertile land, nor should we grow crops to convert to bio-fuel, when there is plenty of sunny desert, of little value otherwise, on which to place solar panels.

With proper stewardship of agricultural land, together with various other food production technologies such as hydroponics, vertical farms and a lot of meat production via tissue culturing, there will be more food per capita than today even with a larger global population. In fact, with a surplus of agricultural land, some might well be returned to nature.

In forests and other ecosystems, technology will also help enormously in monitoring eco-health, and technologies such as genetic modification might be used to improve the viability of some otherwise threatened species.

Anyone who reads my blog regularly will know that I don’t believe climate change is a significant problem in the 2050 time frame, or even this century. I won’t waste any more words on it here. In fact, if I have to say anything, it is that global cooling is more likely to be a problem than warming.

Food and Water

As I just mentioned in the environment section, we will likely use deserts for energy supply and fertile land for crops. Improving efficiency and density will ensure there is far more capability to produce food than we need. Many people will still eat meat, but at least some of it will be produced in factories using processes such as tissue culturing. Meat pastes with assorted textures can then be used to create a variety of processed meats. That might even happen in home kitchens using 3D printer technology.

Water supply has often been predicted by futurists as a cause of future wars, but I disagree. I think that progress in desalination is likely to be very rapid now, especially with new materials such as graphene likely to come on stream in bulk. With easy and cheap desalination, water supply should be adequate everywhere, and although there may be arguments over rivers, I don't think the pressures are sufficient by themselves to cause wars.

Privacy and Freedom

In 2016, we're seeing privacy fighting a losing battle for survival. Governments are increasing surveillance everywhere and demanding more and more access to data on every aspect of our lives, followed by greater control. They invariably cite the desire to control crime and terrorism as the excuse, and as both increase, that excuse will be used until we have very little privacy left. Advancing technology means that by 2050, it will be fully possible to implement thought police to check what we are thinking, planning and desiring, and make sure it conforms to what the authorities have decided is appropriate. Even the supposed servant robots that live with us and the AIs in our machines will keep official watch on us and be obliged to report any misdemeanors. Back doors for the authorities will be in everything. Total surveillance obliterates freedom of thought and expression. If you are not free to think or do something wrong, you are not free.

Freedom is strongly linked to privacy. With laws in place and the means to police them in depth, freedom will be limited to what is permitted. Criminals will still find ways to bypass, evade, masquerade, block and destroy, and it is hard not to believe that criminals will be free to continue doing what they do, while law-abiding citizens will be kept under strict supervision. Criminals will be free while the rest of us live in a digital open prison.

Some say if you don’t want to do wrong, you have nothing to fear. They are deluded fools. With full access to historic electronic records going back to now or earlier, it is not only today’s laws and guidelines that you need to be compliant with but all the future paths of the random walk of political correctness. Social networks can be fiercer police than the police and we are already discovering that having done something in the distant past under different laws and in different cultures is no defense from the social networking mobs. You may be free technically to do or say something today, but if it will be remembered for ever, and it will be, you also need to check that it will probably always be praiseworthy.

I can't counterbalance this section with any positives. I've said before that with all the benefits we can expect, we will end up with no privacy, no freedom, and the future will be a gilded cage.

Science and the arts

Yes, they do go together. Science shows us how the universe works and how to do what we want. The arts are what we want to do. Both will flourish. AI will help accelerate science across the board, with a singularity actually spread over decades. There will be human knowledge, but a great deal more machine knowledge that is beyond un-enhanced human comprehension. However, we will also have the means to connect our minds to the machine world to enhance our senses and intellect, so enhanced human minds will be the norm for many people, and our top scientists and engineers will understand it. In fact, it isn't safe to develop in any other way.

Science and technology advances will improve sports too, with exoskeletons, safe drugs, active skin training acceleration and virtual reality immersion.

The arts will also flourish. Self-actualization through the arts will make full use of AI assistance. A feeble idea enhanced by an AI assistant can become a work of art, a masterpiece. Whether it be writing or painting, music or philosophy, people will be able to do more, enjoy more, appreciate more, be more. What's not to like?

Space

By 2050, space will be a massive business in several industries. Space tourism will range from short sub-orbital trips right up to lengthy stays in space hotels, and maybe on the moon, for the super-rich at least.

Meanwhile asteroid mining will be under way. Some have predicted that this will end resource problems here on Earth, but firstly, there won’t be any resource problems here on Earth, and secondly and most importantly, it will be far too expensive to bring materials back to Earth, and almost all the resources mined will be used in space, to make space stations, vehicles, energy harvesting platforms, factories and so on. Humans will be expanding into space rapidly.

Some of these factories and vehicles and platforms and stations will be used for science, some for tourism, some for military purposes. Many will be used to offer services such as monitoring, positioning, communications just as today but with greater sophistication and detail.

Space will be more militarized too. We can hope that it will not be used in actual war, but I can’t honestly predict that one way or the other.

Migration

If the world around you is increasingly unstable, if people are fighting, if times are very hard and government is oppressive, and if there is a land of milk and honey not far away that you can get to, where you can hope for a much better, more prosperous life, free of tyranny, where instead of being part of the third world, you can be in the rich world, then you may well choose to take the risks and traumas associated with migrating. Population growing far faster than wealth in Africa, and a drop in the global need for oil, will both increase problems in the Middle East and North Africa. Add to that vicious religious sectarian conflict and a great many people will indeed want to migrate. The pressures on Europe and America to accept several million more migrants will be intense.

By 2050, these regions will hopefully have ended their squabbles, and some migrants will return to rebuild, but most will remain in their new homes.

Most of these migrants will not assimilate well into their new countries but will mainly form their own communities where they can have a quite separate culture, and they will apply pressure to be allowed to self-govern. A self-imposed apartheid will result. If we are lucky, it might gradually diffuse as religion becomes less important and the western lifestyle becomes more attractive. However, there is also a reinforcing pressure: this self-exclusion and geographic isolation result in fewer opportunities, less mixing with others and therefore a growing feeling of disadvantage, exclusion and victimization. Tribalism becomes reinforced and opportunities for tension increase. We already see that manifested in the UK and other European countries.

Meanwhile, much of the world will be prosperous, and there will be many more opportunities for young capable people to migrate and prosper elsewhere. An ageing Europe with too much power held by older people and high taxes to pay for their pensions and care might prove a discouragement to stay, whereas the new world may offer increasing prospects and lowering taxes, and Europe and the USA may therefore suffer a large brain drain.

Politics

If health care is better and cheaper thanks to new tech and becomes less of a political issue; if resources are abundantly available, the economy is healthy, people feel wealthy enough, and resource allocation and wealth distribution become less of a political issue; if the environment is healthy; if global standards of human rights, social welfare and so on are acceptable in most regions; and if people are freer to migrate where they want to go; then there may be a little less for countries to fight over. There will be a little less 'politics' overall. Most 2050 political arguments and debates will be over social cohesion, culture, generational issues, rights and so on, not health, defence, environment, energy or industry.

We know from history that this is no guarantee of peace. People disagree profoundly on a broad range of issues other than life's basic essentials. I've written a few times on the increasing divide and tensions between tribes, especially between left and right. I do think there is a strong chance of civil war in Europe or the USA or both. Social media reinforce views as people expose themselves only to others who think the same, and this creates, reinforces and amplifies an us-and-them feeling. That is the main ingredient for conflict, and rather than seeing that and trying to defuse it, we see left and right becoming ever more entrenched in their views. The current problems surrounding Islamic migration show the split extremely well. Each side demonizes the other, extreme camps are growing on both sides and the middle ground is eroding fast. Our leaders only make things worse by refusing to acknowledge and address the issues. I suggested in previous blogs that the second half of the century is when tensions between left and right might result in the Great Western War, but that might well be brought forward a decade or two by a long migration from an unstable Middle East and North Africa, which looks set to worsen over the next decade. Internal tensions might build for another decade after that, accompanied by a brain drain of the most valuable people and increasing inter-generational tensions amplifying the left-right divide, with a boil-over in the 2040s. That isn't to say we won't see some lesser conflicts before then.

I believe the current tensions between the West, Russia and China will go through occasional ups and downs but the overall trend will be towards far greater stability. I think the chances of a global war will decrease rather than increase. That is just as well since future weapons will be far more capable of course.

So overall, the world peace background will improve markedly, but internal tensions in the West will increase markedly too. The result is that wars between countries or regions will be less likely but the likelihood of civil war in the West will be high.

Robots and AIs

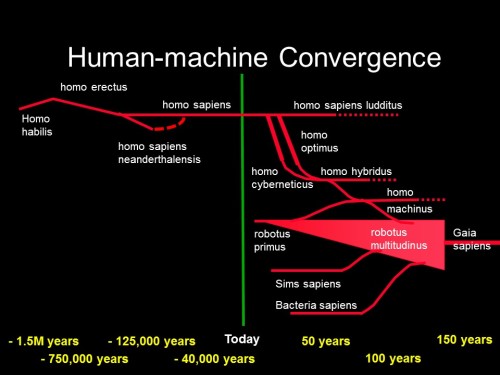

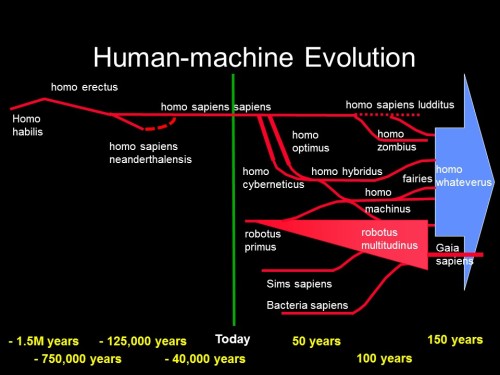

I mentioned robots and AIs in passing in the loneliness section, but they will have strong roles in all areas of life. Many that are thought of simply as machines will act as servants or workers, but many will have advanced levels of AI (not necessarily on board, it could be in the cloud) and people will form emotional bonds with them. Just as important, many such AI/robots will be so advanced that they will have relationships with each other and their own culture. A 21st century version of the debates on slavery is already happening today for sentient AIs, even though we don't have them yet. It is good to be prepared, but we don't know for sure what such smart and emotional machines will want. They may not want what our human prejudices suggest, so they will need to be involved in debate and negotiation. It is almost certain that the upper levels of AIs and robots (or, more likely, androids) will be given some rights: freedom from pain and abuse, ownership of their own property, a degree of freedom to roam and act of their own accord, and the right to the pursuit of happiness. They will also get the right to government representation. Which other rights they might get is anyone's guess, but those rights will change over time, mainly because AIs will evolve and change over time.

OK, I’ve rambled on long enough and I’ve addressed some of the big areas I think. I have ignored a lot more, but it’s dinner time.

A lot of things will be better, some things worse, probably a bit better overall but with the possibility of it all going badly wrong if we don’t get our act together soon. I still think people in 2050 will live in a gilded cage.